I Participated in Facebook's "High Court" Roundtable, and I'm Worried for the Freedom of Speech

June 17, 2019

By Mike Brownfield

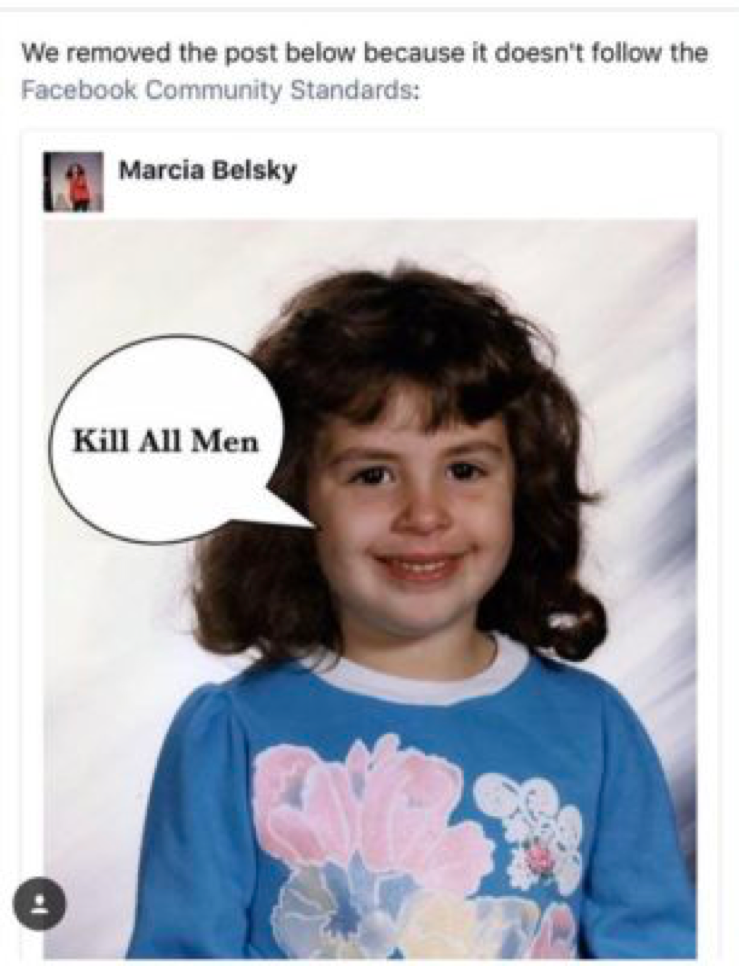

“Kill All Men”

The words appeared on a Facebook post in a cartoon bubble beside the smiling face of a cherubic young girl. Is this hate speech that should be censored? Or is it satire that should be allowed to stand?

I sat at a table in Norman, Oklahoma, to debate this question as part of a roundtable discussion on Facebook’s proposed independent oversight board, which would be tasked with deciding whether to censor posts like these. Facebook invited me to participate on behalf of the Goldwater Institute and offer a conservative’s perspective on their plans.

It was my first visit to the Great Plains, and it seemed to be the most un-Silicon Valley of locations for a conversation with the Big Tech Behemoth. But I suppose that was by design. Facebook has held similar listening sessions all around the world — in Madrid, Warsaw, Brussels, Istanbul, Dubai, Tel Aviv, and Johannesburg, among others. Until Norman was added to the list, America’s flyover country hadn’t been consulted in this fashion.

Facebook’s team presented their vision for the oversight board, which has been described by some as a “Supreme Court” or “High Court” of content. Up to 40 individuals from around the globe with expertise in “diverse disciplines and a commitment to safety and free expression” would be selected (by whom is yet to be determined) to decide Facebook’s “most difficult and contested decisions about taking down or leaving up content,” including issues of “artistic expression, suspected hate speech or bullying.” The board would choose which cases to decide, gather and consider facts, (potentially) rely upon the Universal Declaration of Human Rights as its standard for the freedom of speech (not the First Amendment), and publish its decisions. This would effectively create case law, set precedent, and define the company’s policy on speech.

The Facebook employees who presented the plan appeared to be genuinely earnest in their mission to solve a problem that has plagued the social media platform: How is it possible to monitor the speech of 2.38 billion active Facebook users who post hundreds of millions of photos, videos, and comments every day? And how is it then possible to accurately understand the meaning of a post or a photo, the intent of the poster, and the context of a post in order to discern whether to allow the speech to stand? To make matters even more difficult, decisions are made according to Facebook’s Community Standards, which are more than 3,000 words long.

By its own account, Facebook already employs 15,000 contractors worldwide to moderate content in over 50 languages, at sites in countries including Germany, Ireland, Latvia, Spain, Portugal, the Philippines, and the United States. In total, the company has 30,000 employees dedicated to safety and security. And that’s on top of its artificial intelligence filters, which are supposedly getting even better at catching offensive content before it sees the light of day.

And yet despite those tens of thousands of employees and high-tech filters, the most hideous side of humanity still finds an audience on Facebook. Most recently, the horrific terrorist attack in New Zealand was livestreamed and viewed thousands of times before it was removed. Add to that concerns about Russia using Facebook to influence U.S. elections, Europe’s move to regulate tech companies, Washington’s investigation of tech’s supposed anti-competitive practices, and continued reports of conservatives being censored on social media, and Facebook clearly has a problem on its hands.

I thought about these issues as I sat in Oklahoma, pondering the absurdity of discussing whether a Facebook post that said “Kill all men” should be censored, especially when, it turns out, the author is a female comedian living in New York City who was making an attempt at satire. Is this the kind of work that Facebook’s Supreme Court of Censorship would be tasked with doing? Parsing a single post from a comedian in New York to divine whether it’s comedy or hate speech, and how the company’s 3,000-word Community Standards policy and international law would apply in this entirely insignificant situation? How could a board of 40 people be expected to make decisions like these, especially given the massive volume of Facebook posts?

I was growing flummoxed by the futility of the exercise when I asked a Facebook employee whether the true purpose of the board is to let Facebook punt controversial decisions to an outside party, wash its hands, and disclaim accountability for politically unpopular results. I knew the answer before I asked. Of course not, she replied. Facebook will still be accountable, and they won’t shy away from difficult decisions. I’m not so sure.

At a town hall discussion earlier in the day, Facebook took questions from the community. A retired teacher stood and commented, “The board must enact policies that protect our democracy and the democratic process that’s enshrined in the U.S. Constitution. Free speech, in my opinion, is not the be-all of the U.S. Constitution. People have a right to accurate information, and not the verifiably false information that you find on Facebook. What policies would you enact and enforce to reinforce this need for accurate information and the takedown of verifiably false data, including Russian interference?”

The audience applauded.

Facebook’s answer: That’s exactly the type of issue the board could hear and decide upon.

While I can understand why Facebook would prefer to defer difficult decisions to an “oversight” board, the reality is that such a board would simply engage in more censorship, particularly of right-leaning groups. The better course would be for the default position of Facebook (and other social media platforms) to be one that embraces free speech, even when controversial. Of course, as a private company they are free to do as they choose, and if past is prologue, that choice in all likelihood will be to pander to the Left. Despite Facebook’s hat tip to conservatives in Oklahoma, their voices will certainly not be protected by those who would subordinate the freedom of speech to interests all their own.

Mike Brownfield is Communications Director at the Goldwater Institute.
